GoPro Visual-Inertial SLAM

high definition inexpensive mapping with action cameras

Publications

- B. Joshi, M. Xanthidis, S. Rahman, and I. Rekleitis, “High Definition, Inexpensive, Underwater Mapping,” in IEEE International Conference on Robotics and Automation (ICRA), 2022

- B. Joshi, M. Xanthidis, M. Roznere, N. J. Burgdorfer, P. Mordohai, A. Q. Li, and I. Rekleitis, “Underwater Exploration and Mapping,” in IEEE/OES Autonomous Underwater Vehicles Symposium (AUV), 2022

The imaging technology of action cameras produces stunning videos even in the challenging conditions of the underwater domain. Using an action camera as a sensor for visual SLAM provides two benefits: first, it delivers better image quality, and second, it does not require a water-tight enclosure along with any necessary circuitry, in effect lowering the barrier to entry for underwater visual SLAM. The GoPro camera provides high-definition video along with a synchronized IMU data stream encoded in a single MP4 file, paving the way for its use as a standalone visual-inertial sensor. To enhance real-time mapping of underwater structures, the SVIn2 SLAM framework is augmented so that, after loop closures, the map is deformed to preserve the relative pose between each point and its attached keyframes, producing a consistent global map.

GoPro as Visual-Inertial Sensor

The GoPro 9 consists of a color camera, an IMU, and a GPS. GPS does not work underwater; thus it was not used in this work. However, GPS information can be fused with Visual Inertial Navigation Systems (VINS) during above-water operations.

The GoPro 9 is equipped with a Sony IMX677, a diagonal 7.85 mm CMOS active pixel type image sensor with approximately 23.64M active pixels. The GoPro 9 can run at 60 Hz at a maximum resolution of 4K. It also includes a Bosch BMI260 IMU equipped with a 16-bit 3-axis MEMS accelerometer and gyroscope, and inherently records IMU data at 200 Hz. The timestamps of the IMU and camera are synchronized using the timing information from the metadata encoded inside the MP4 video. The GoPro 9 also includes a UBlox UBX-M8030 GNSS chip capable of concurrent reception of up to 3 GNSS (among GPS, Galileo, GLONASS, and BeiDou) with an accuracy of 2 m horizontal circular error probable (CEP), meaning 50% of measurements fall inside a circle of radius 2 m. The GoPro 9 records GPS measurements at ≈18 Hz.

| Sensor | Type | Rate | Characteristics |
|---|---|---|---|
| Camera | Sony IMX677 | 60 Hz | max. 5599×4223, RGB color mosaic filters |
| IMU | Bosch BMI260 | 200 Hz | 3D accelerometer & 3D gyroscope |
| GPS | UBlox UBX-M8030-CT | 18 Hz | 2 m CEP accuracy |

During data collection, we found that the SuperView mode generates non-linear distortions that thwart the calibration of the camera’s intrinsic parameters. The videos were recorded at full High Definition (HD) resolution of 1920×1080 with the wide lens setting (horizontal field-of-view (FOV) 118°, vertical FOV 69°) and the HyperSmooth level set to off.

GoPro Telemetry Extraction

The GoPro 9 MP4 video file is divided into multiple streams: video encoded with the H.265 encoder, audio encoded in advanced audio coding (AAC) format, timecode (audio-video synchronization information), the GoPro fdsc data stream for file repair, and the GoPro telemetry stream in GoPro Metadata Format, referred to as GPMF. GPMF is divided into payloads, extracted using gpmf-parser, with each payload containing sensor measurements for 1.01 seconds while recording at a frame rate of 29.97 Hz. Particular sensor information is obtained from the payload using a FourCC, a 7-bit 4-character ASCII key: for instance, 'ACCL' for the accelerometer, 'GYRO' for the gyroscope, and 'SHUT' for shutter exposure times. Using the start and end times of each payload along with the number of measurements in that payload, we interpolate the timing of all measurements. We decode the video stream and extract images from the MP4 file using the FFmpeg library and combine them with the IMU measurements using timing information from the GPMF payload.
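The per-payload timing interpolation can be sketched as follows. This is a minimal illustration, not the project's extraction code: it assumes that samples inside a payload are evenly spaced, that the first sample coincides with the payload start, and that payloads arrive as hypothetical `(start_s, end_s, samples)` tuples already decoded from GPMF.

```python
def interpolate_timestamps(payload_start, payload_end, num_samples):
    """Spread num_samples timestamps evenly over [payload_start, payload_end).

    Assumption: uniform sampling inside a payload, first sample at the start.
    """
    if num_samples <= 0:
        return []
    step = (payload_end - payload_start) / num_samples
    return [payload_start + i * step for i in range(num_samples)]


def stamp_payloads(payloads):
    """Attach an interpolated timestamp to every reading.

    payloads: list of (start_s, end_s, samples) tuples (hypothetical layout),
    where samples is the list of readings for one FourCC key such as 'ACCL'.
    Returns a flat list of (timestamp_s, reading) pairs in recording order.
    """
    stamped = []
    for start, end, samples in payloads:
        times = interpolate_timestamps(start, end, len(samples))
        stamped.extend(zip(times, samples))
    return stamped
```

With a half-open interval, the last sample of one payload and the first sample of the next never receive the same timestamp, which keeps the merged IMU stream strictly increasing in time.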

Calibration

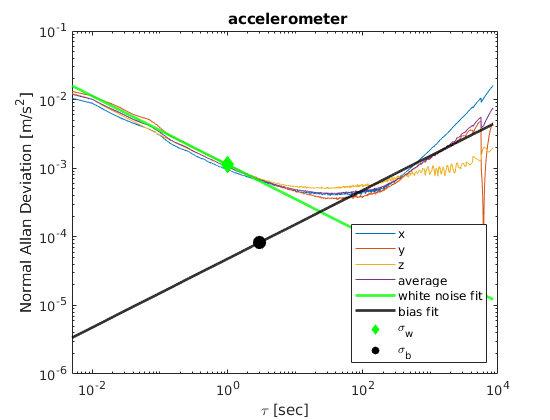

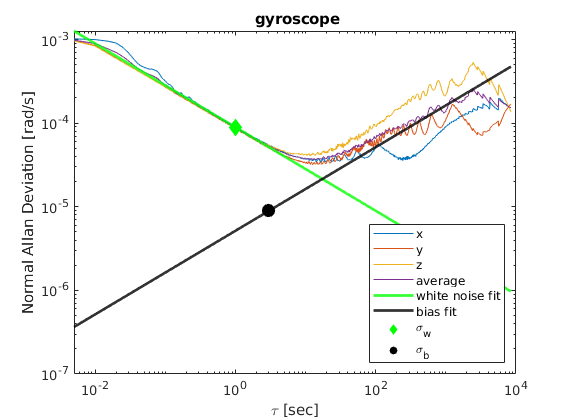

First, we calibrate the camera’s intrinsic parameters. We use the camera-calib sequences, in which we move the GoPro 9 camera in front of a calibration pattern placed in an indoor swimming pool to observe it from different viewing angles. The intrinsic noise parameters of the IMU are required for the probabilistic modeling of the IMU measurements used in state estimation algorithms and for estimating the camera-IMU extrinsic parameters. We assume that IMU measurements (both linear accelerations and angular velocities) are perturbed by zero-mean uncorrelated white noise with standard deviation \(σ_w\) and a random walk bias, which is the integration of white noise with standard deviation \(σ_b\). To determine the characteristics of the IMU noise, the Allan deviation \(σ_{Allan}(τ)\) is plotted as a function of the averaging time τ. In a log-log plot, white noise appears as a line with slope −1/2, and \(σ_w\) can be read off at τ = 1. Similarly, the bias random walk \(σ_b\) can be found by fitting a straight line with slope 1/2 and reading its value at τ = 3.
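As an illustration of how the Allan deviation curve is obtained from a static recording, the sketch below computes the overlapping Allan deviation of a single sensor axis in plain Python. It is not the calibration tool used for the dataset; the function names and the simulated white-noise input are assumptions made for the example.

```python
import math
import random


def allan_deviation(rate, dt, cluster_sizes):
    """Overlapping Allan deviation of one gyro or accelerometer axis.

    rate: samples from a stationary sensor; dt: sample period in seconds;
    cluster_sizes: integers m, each giving an averaging time tau = m * dt.
    Returns a list of (tau, sigma_allan) pairs.
    """
    # Integrate the rate once; cluster averages become differences of theta.
    theta = [0.0]
    for r in rate:
        theta.append(theta[-1] + r * dt)
    n = len(theta)
    curve = []
    for m in cluster_sizes:
        terms = n - 2 * m
        if terms <= 0:
            continue  # not enough data for this averaging time
        tau = m * dt
        acc = 0.0
        for k in range(terms):
            d = theta[k + 2 * m] - 2.0 * theta[k + m] + theta[k]
            acc += d * d
        curve.append((tau, math.sqrt(acc / (2.0 * tau * tau * terms))))
    return curve


def simulate_white_noise(sigma_w, dt, n, seed=0):
    """Synthetic stationary axis with continuous-time noise density sigma_w.

    The discrete per-sample standard deviation is sigma_w / sqrt(dt).
    """
    rng = random.Random(seed)
    sigma_d = sigma_w / math.sqrt(dt)
    return [rng.gauss(0.0, sigma_d) for _ in range(n)]


if __name__ == "__main__":
    dt = 1.0 / 200.0  # 200 Hz, the IMU rate stated in the text
    samples = simulate_white_noise(0.01, dt, 20000)
    for tau, sigma in allan_deviation(samples, dt, [50, 200, 800]):
        print(f"tau = {tau:.2f} s, sigma_allan = {sigma:.5f}")
```

For pure white noise the curve follows \(σ_w / \sqrt{τ}\), so the value at τ = 1 estimates \(σ_w\); for a pure bias random walk it follows \(σ_b \sqrt{τ/3}\), which is why \(σ_b\) is read at τ = 3.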

Global Mapping

Most VIO packages, when applying loop closure, update only the pose graph, leaving the triangulated features at their original estimates. COLMAP is a global optimization package whose final result optimizes both the poses and the 3D feature locations. We run COLMAP using 2 images per second, resulting in 2000 to 3000 images in the cavern and cave sequences, which takes on average 7 to 10 hours. To achieve real-time global mapping, we enhance the SLAM framework to update the 3D positions of the tracked features every time a loop closure occurs, so that the 3D features stay consistent with the pose-graph optimization results. For each keyframe f, the VIO module passes the pose of the keyframe in the world coordinate system \(T_{wf}\) and the 3D positions of the landmarks in the world frame \(P_w\). For each 3D point, we calculate its local position in the keyframe as \(P_f = T_{wf}^{-1} P_w\). In the event of loop closure, we deform the global map so that the relative pose between each point and its attached keyframes remains unchanged. Because \(P_f\) is expressed relative to the keyframe, whenever the keyframe pose \(T_{wf}\) changes due to loop closure updates, the location of the 3D landmarks in the global frame changes accordingly, producing a globally consistent map. Since each point requires constant time, global map building scales linearly, O(n), with the number of points in the scene.
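The map deformation idea can be illustrated with a small sketch. It assumes that \(T_{wf}\) maps keyframe coordinates into the world frame (so the local position is obtained with the inverse transform), represents a pose as a rotation matrix and translation pair, and, as a simplification, anchors each point to a single keyframe. The `GlobalMap` class and its method names are hypothetical and are not taken from SVIn2.

```python
def transform_point(R, t, p):
    """Apply T = (R, t) to point p: R * p + t."""
    return [sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)]


def inverse_transform_point(R, t, p):
    """Apply T^{-1} to point p: R^T * (p - t)."""
    d = [p[i] - t[i] for i in range(3)]
    return [sum(R[j][i] * d[j] for j in range(3)) for i in range(3)]


class GlobalMap:
    """Stores landmarks relative to their keyframe so the map follows pose updates."""

    def __init__(self):
        self.keyframe_poses = {}  # keyframe id -> (R, t), i.e. T_wf
        self.local_points = []    # list of (keyframe id, P_f)

    def add_keyframe(self, kf_id, R, t, world_points):
        """Register a keyframe and convert its landmarks P_w into local P_f."""
        self.keyframe_poses[kf_id] = (R, t)
        for p_w in world_points:
            p_f = inverse_transform_point(R, t, p_w)
            self.local_points.append((kf_id, p_f))

    def update_pose(self, kf_id, R, t):
        """Called after loop closure with the optimized keyframe pose."""
        self.keyframe_poses[kf_id] = (R, t)

    def world_points(self):
        """Recompute every landmark in the world frame: one transform per point."""
        points = []
        for kf_id, p_f in self.local_points:
            R, t = self.keyframe_poses[kf_id]
            points.append(transform_point(R, t, p_f))
        return points
```

Because only \(P_f\) is stored, a loop closure needs no per-point bookkeeping beyond replacing the keyframe poses; rebuilding the map is a single pass over the points.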

Datasets

The GoPro 9 Underwater VIO dataset consists of calibration sequences in addition to odometry evaluation sequences recorded using 2 GoPro 9 cameras. The two cameras are referred to as \(g_i\), \(i \in \{1, 2\}\). Datasets can be categorized as:

- camera-calib: for calibrating the camera intrinsic parameters underwater. We provide calibration sequences using two calibration patterns: a grid of AprilTags and a checkerboard for each camera.

- camera-imu-calib: for calibrating the camera-IMU extrinsic parameters in order to determine the relative pose between the IMU and the camera. The camera is moved in front of the AprilTag grid, exciting all 6 degrees of freedom.

- imu-static: contains IMU data to estimate white noise and random walk bias parameters. These sequences are recorded with the camera stationary for at least 4 hours for each camera.

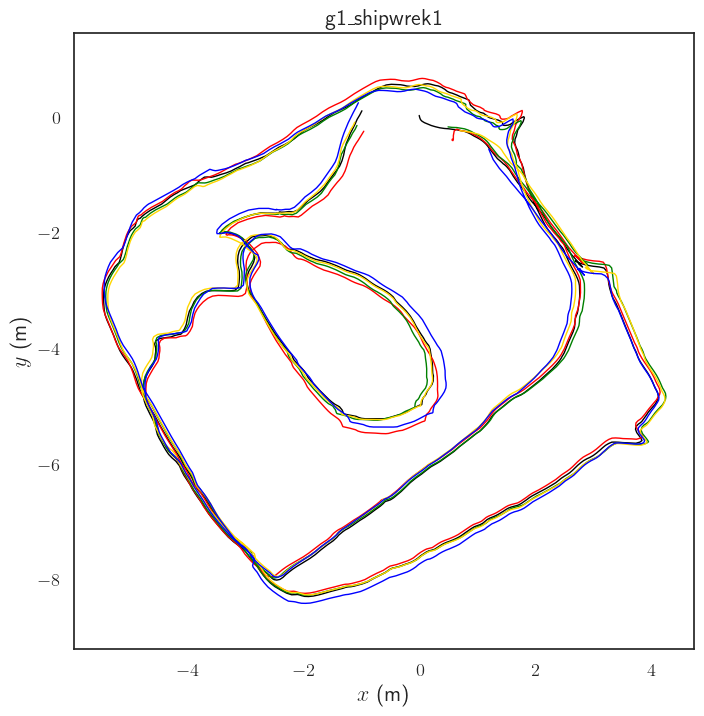

- shipwreck: two sequences collected by a handheld GoPro 9 on an artificial reef (refueling barge wreck) 55 km outside of Charleston, SC, USA.

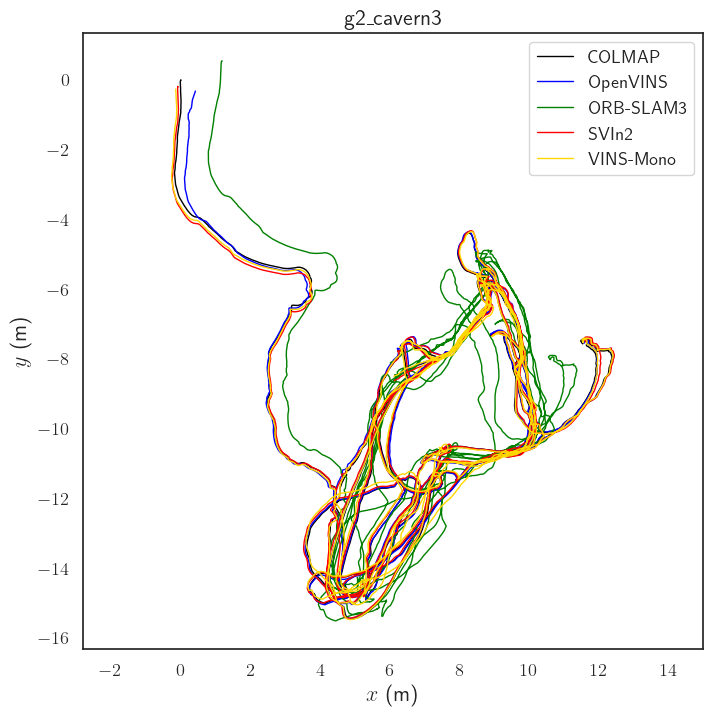

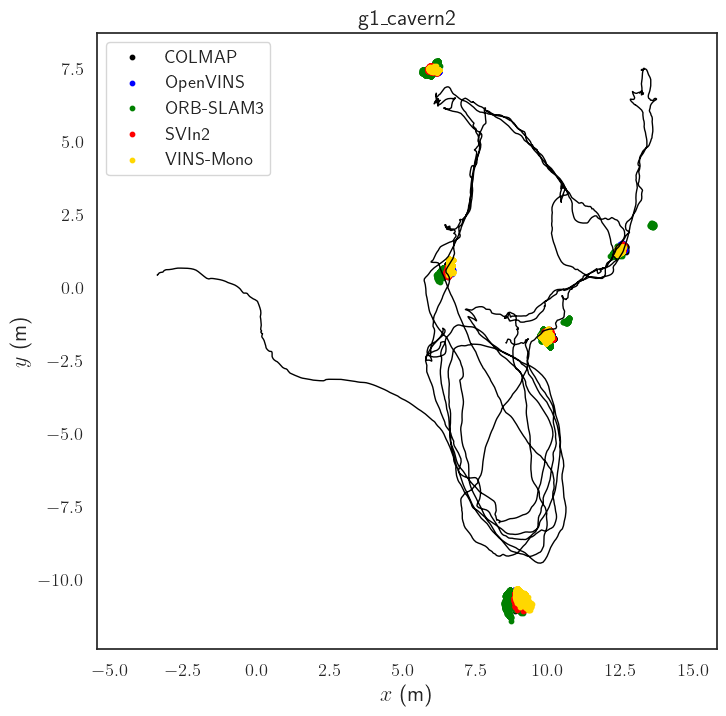

- cavern: three trajectories traversed inside the ballroom cavern at Ginnie Springs, FL. Inside the cavern, five markers (AR single tags) were placed to establish ground truth measurements. Each trajectory consisted of several loops, each observing slightly different parts of the cavern but all ensuring that the tag of that part of the cavern was visible.

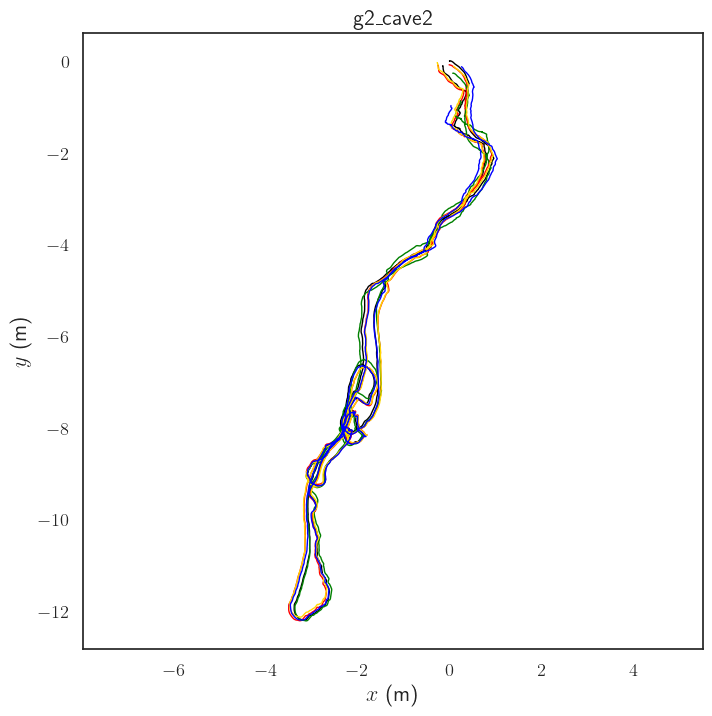

- cave: two sequences collected at the Devil’s system, FL. For the first sequence, the GoPro was mounted on the stereo rig; the second sequence was recorded using only the GoPro.

Results

Due to the absence of GPS or motion capture systems in underwater environments, we use COLMAP to generate baseline trajectories. Because monocular SfM inherently suffers from scale observability constraints and global optimization does not converge over large trajectories, we consider the estimated trajectories accurate only up to scale. As COLMAP does not provide an accurate scale, we evaluate the accuracy of the various tracking algorithms using the absolute trajectory error (ATE) metric after Sim(3) alignment.

Tracking Results

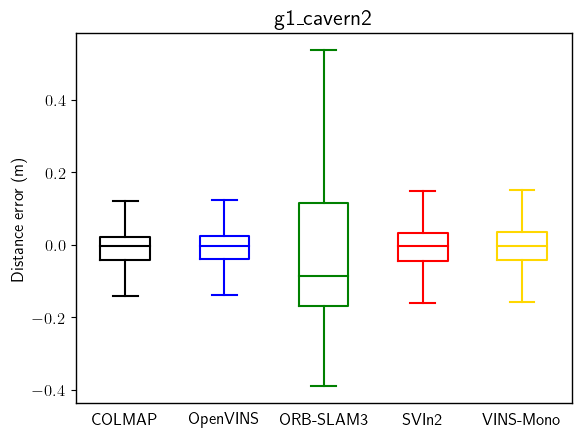

We compare the performance of various open-source visual-inertial odometry (VIO) methods on the datasets described above. We evaluate VINS-Mono, ORB-SLAM3, SVIn2, and OpenVINS based on the RMSE of the ATE. All the compared algorithms are equipped with loop closure, resulting in low overall RMSE. It should be noted that OpenVINS is based on the Multi-State Constraint Kalman Filter (MSCKF), whereas all other methods are based on non-linear least-squares optimization.

| Sequence | Length [m] | SVIn2 | ORB-SLAM3 | VINS-Mono | OpenVINS |
|---|---|---|---|---|---|
| g1_shipwreck1 | 71.60 | 0.148 | 0.098 | 0.143 | 0.167 |
| g1_shipwreck2 | 79.07 | 0.238 | 0.207 | 0.202 | 0.337 |
| g1_cavern1 | 243.10 | 0.090 | 0.231 | 0.090 | 0.288 |
| g1_cavern2 | 240.60 | 0.074 | 0.363 | 0.097 | 0.084 |
| g2_cavern3 | 341.29 | 0.089 | 0.687 | 0.131 | 0.081 |
| g2_cave1 | 219.58 | 0.072 | 0.150 | 0.105 | 0.081 |
| g2_cave2 | 222.64 | 0.093 | 0.089 | 0.097 | 0.229 |

AR-Tag based Validation

As there is no continuous tracking for absolute ground truth, 3D landmark-based validation with AR tags is used to quantify the accuracy of the evaluated methods. As part of the experimental setup in the cavern sequences with multiple loops, we placed 5 different AR tags printed on waterproof paper at different locations inside the cavern. We observe the variance of the position of each AR tag from its mean position over the whole trajectory length. If the trajectories do not drift over time, the markers must be observed at the same location during multiple visits. Once the relative position from ar_track_alvar is found, the global position can be found as \(T^k_{WM} = T^k_{WC} \, P_{CM}\), where \(T^k_{WM}\) is the marker position in world coordinate frame W at time k, \(T^k_{WC}\) is the pose of the camera C in W at time k (produced by the SLAM/odometry system), and \(P_{CM}\) is the relative position of marker M from the camera.
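A minimal sketch of this validation is given below. It assumes that each observation supplies the camera pose as a rotation matrix and translation (standing in for \(T^k_{WC}\)) together with the marker position in the camera frame (\(P_{CM}\)), and it takes the mean squared distance from the mean position as one possible definition of the variance. The function names are hypothetical.

```python
def marker_world_position(R_wc, t_wc, p_cm):
    """Marker position in the world frame: R_wc * p_cm + t_wc."""
    return [sum(R_wc[i][j] * p_cm[j] for j in range(3)) + t_wc[i]
            for i in range(3)]


def marker_spread(observations):
    """Mean world position of one tag and its variance around that mean.

    observations: list of (R_wc, t_wc, p_cm) tuples, one per detection of
    the same tag. The variance here is the mean squared Euclidean distance
    of the per-detection world positions from their mean (an assumption).
    """
    positions = [marker_world_position(R, t, p) for R, t, p in observations]
    n = len(positions)
    mean = [sum(p[i] for p in positions) / n for i in range(3)]
    variance = sum(
        sum((p[i] - mean[i]) ** 2 for i in range(3)) for p in positions
    ) / n
    return mean, variance
```

A drift-free trajectory places every detection of a tag at the same world position, so the variance is zero; accumulated drift between visits shows up as a larger value.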

Global Mapping

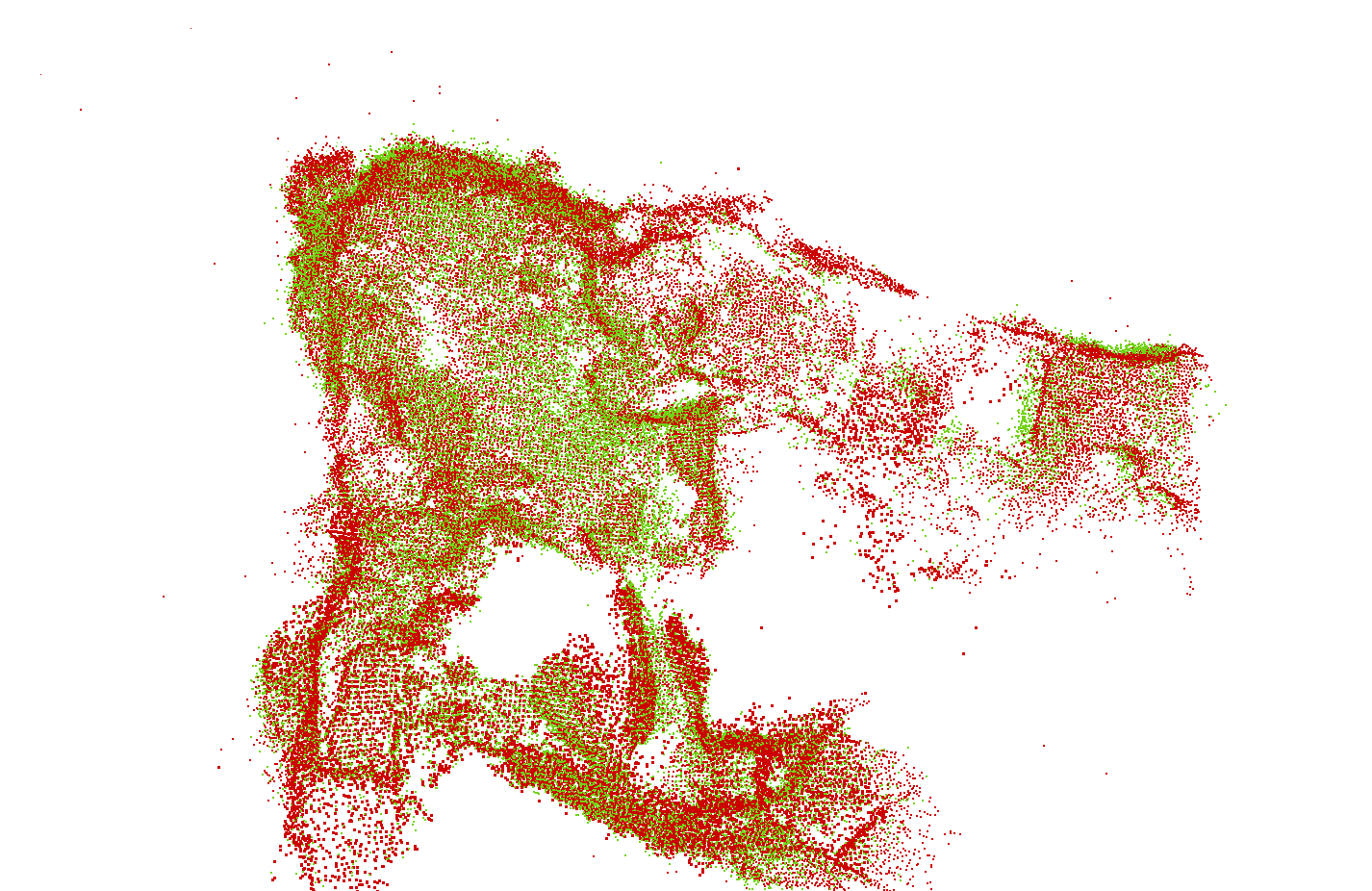

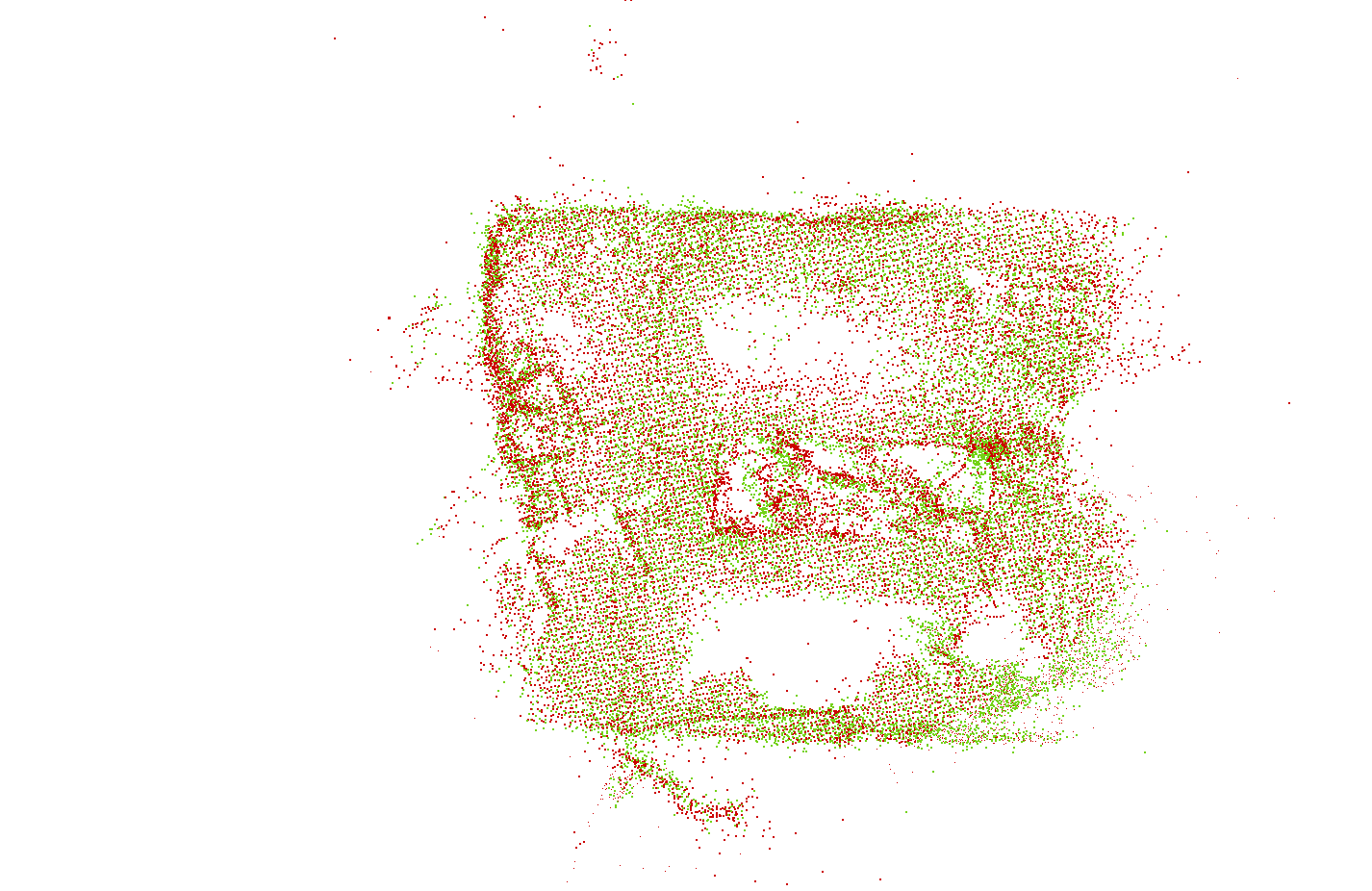

We evaluate the global map produced by enhancing SVIn2 to update the triangulated feature positions after loop closures by comparing them with COLMAP’s sparse pointcloud. The pointclouds come from different sources and differ in size, so we align the pointclouds from COLMAP and SVIn2 as follows:

- Perform voxel downsampling with a voxel size of 10 cm.

- Compute the FPFH feature descriptor, which describes the local geometric signature, for each point.

- Find correspondences between the pointclouds by computing a similarity score between FPFH descriptors.

- Feed all putative correspondences to TEASER++ to perform global registration, finding the transformation that aligns corresponding points.

- Fine-tune the registration by running ICP over the original point cloud with the TEASER++ solution as the initial guess.

| Sequence | fitness | inlier_rmse | # of correspondences |
|---|---|---|---|
| g1_shipwreck1 | 0.773 | 0.058 | 12,391 |
| g1_shipwreck2 | 0.500 | 0.063 | 9,959 |
| g1_cavern1 | 0.738 | 0.058 | 34,481 |
| g1_cavern2 | 0.727 | 0.060 | 44,797 |
| g2_cavern3 | 0.698 | 0.059 | 46,875 |
| g2_cave1 | 0.107 | 0.067 | 6,302 |
| g2_cave2 | 0.326 | 0.064 | 19,750 |

The reconstruction results are compared based on registration accuracy using the fitness and inlier_rmse metrics. More specifically, fitness is the ratio of the number of inlier correspondences (distance less than the voxel size) to the number of points in the SVIn2 pointcloud, whereas inlier_rmse is the root mean squared error of all inlier correspondences.
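The two metrics can be made concrete with a short sketch. It assumes the SVIn2 pointcloud has already been aligned to the COLMAP pointcloud and uses a brute-force nearest-neighbor search for clarity; a practical implementation would use a KD-tree. The function name and argument layout are hypothetical.

```python
import math


def registration_metrics(source, target, max_distance):
    """Compute (fitness, inlier_rmse) for an already aligned source cloud.

    source: list of [x, y, z] points (here, the SVIn2 pointcloud).
    target: list of [x, y, z] points (here, the COLMAP pointcloud).
    max_distance: inlier threshold, e.g. the voxel size.
    A source point is an inlier if its nearest target point is closer
    than max_distance.
    """
    threshold_sq = max_distance * max_distance
    inlier_sq = []
    for s in source:
        # Brute-force nearest neighbor: O(len(source) * len(target)).
        best = min(sum((s[i] - t[i]) ** 2 for i in range(3)) for t in target)
        if best < threshold_sq:
            inlier_sq.append(best)
    fitness = len(inlier_sq) / len(source)
    if not inlier_sq:
        return fitness, 0.0
    inlier_rmse = math.sqrt(sum(inlier_sq) / len(inlier_sq))
    return fitness, inlier_rmse
```

Read together, a high fitness with a low inlier_rmse indicates that most SVIn2 points have a close COLMAP counterpart, while a low fitness means that much of the SVIn2 map has no counterpart within the threshold.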

Citation

If you find this work useful in your research, please consider citing:

@inproceedings{gopro,

author = {Bharat Joshi and Marios Xanthidis and Sharmin Rahman and Ioannis Rekleitis},

title = {High Definition, Inexpensive, Underwater Mapping},

booktitle = {IEEE International Conference on Robotics and Automation (ICRA)},

year = {2022},

pages={1113-1121},

doi={10.1109/ICRA46639.2022.9811695}

}