Visual-Inertial SLAM Comparison

Publications

B. Joshi, B. Cain, J. Johnson, M. Kalitazkis, S. Rahman, M. Xanthidis, A. Hernandez, N. Karaperyan, A. Q. Li, N. Vitzilaios, and I. Rekleitis, “Experimental Comparison of Open Source Visual-Inertial-Based State Estimation Algorithms in the Underwater Domain,” In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2019

This project analyses the performance of state-of-the-art visual-inertial SLAM systems to understand their capabilities in challenging environments, with a focus on the underwater domain. Some of the popular sensors used in indoor/outdoor SLAM (e.g., laser range finder, GPS, RGB-D camera) cannot be used underwater. The underwater environment also presents several challenges for vision-based state estimation, including low visibility, color attenuation, color saturation, floating particulates, and dynamic objects such as fish. Thus, we compared the performance of visual-SLAM methods on underwater datasets collected over the years with underwater robots and sensors deployed by the Autonomous Field Robotics Lab.

Open Source Visual-Inertial SLAM Algorithms

We compared the following open-source visual-inertial SLAM algorithms, which can be classified by number of cameras, IMU use (required or optional), frontend (direct vs. indirect, i.e., feature-based), backend (filtering vs. optimization-based), and loop closure (present or not).

| Algorithm | # of cameras | IMU? | Frontend | Backend | Loop closure |
|---|---|---|---|---|---|
| DM-VIO | mono | yes | direct | optimization | ✗ |
| DSO | mono, stereo | optional | direct | optimization | ✓ |
| Kimera | mono, stereo | yes | indirect | optimization | ✓ |
| OKVIS | multi | yes | indirect | optimization | ✗ |
| OpenVINS | multi | yes | indirect | filtering | ✓ |
| ORB-SLAM3 | mono, stereo | optional | indirect | optimization | ✓ |
| ROVIO | mono, stereo | yes | direct | filtering | ✗ |
| S-MSCKF | stereo | yes | indirect | filtering | ✗ |
| SVIN | mono, stereo | yes | indirect | optimization | ✓ |
| SVO | mono, stereo | optional | indirect | optimization | ✓ |
| VINS-Fusion | mono, stereo | optional | indirect | optimization | ✓ |

Datasets

All datasets were collected with a stereo camera (IDS UI-3251LE) at 15 frames per second (fps) and a resolution of 1600×1200, with IMU (MicroStrain 3DM-GX15) measurements recorded at 100 Hz. The datasets span four underwater environments and were collected using multiple robotic platforms.

| Dataset | Length (m) | Duration (s) |
|---|---|---|
| Cave | 98.7 | 710 |
| Bus Outside | 67.5 | 585 |
| Cemetery | 95.7 | 434 |
| Coral Reef | 48.0 | 180 |

Results

The trajectories from the open-source visual-inertial SLAM algorithms are evaluated against COLMAP pseudo-ground truth after sim(3) alignment, using absolute trajectory error (ATE) and tracking percentage.
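As a rough illustration of this evaluation, the sketch below computes the ATE of an estimated trajectory against a reference after a closed-form similarity alignment (Umeyama's method). It is a minimal sketch under stated assumptions, not the evaluation code used for the results here: the function names `umeyama_sim3` and `ate_rmse` are hypothetical, the estimated positions are assumed to be already time-associated with the reference positions, and the reference in the demo is synthetic rather than a COLMAP reconstruction.

```python
import numpy as np


def umeyama_sim3(est, gt):
    """Closed-form least-squares (s, R, t) such that gt ~ s * R @ est + t.

    est, gt: (n, 3) arrays of already time-associated positions.
    """
    n = est.shape[0]
    mu_est, mu_gt = est.mean(axis=0), gt.mean(axis=0)
    est_c, gt_c = est - mu_est, gt - mu_gt

    cov = gt_c.T @ est_c / n                 # 3x3 cross-covariance
    U, D, Vt = np.linalg.svd(cov)

    # Guard against a reflection so that R is a proper rotation.
    S = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        S[2, 2] = -1.0

    R = U @ S @ Vt
    var_est = (est_c ** 2).sum() / n         # variance of the estimate
    s = np.trace(np.diag(D) @ S) / var_est   # optimal scale
    t = mu_gt - s * R @ mu_est               # optimal translation
    return s, R, t


def ate_rmse(est, gt):
    """Translation RMSE between gt and the sim(3)-aligned estimate."""
    s, R, t = umeyama_sim3(est, gt)
    aligned = s * est @ R.T + t              # apply s * R * p_hat + t row-wise
    err = gt - aligned
    return float(np.sqrt(np.mean(np.sum(err ** 2, axis=1))))


# Synthetic check (hypothetical data): an estimate that differs from the
# reference only by a similarity transform should align almost perfectly.
rng = np.random.default_rng(0)
gt = rng.normal(size=(100, 3))
yaw = 0.4
R_true = np.array([[np.cos(yaw), -np.sin(yaw), 0.0],
                   [np.sin(yaw),  np.cos(yaw), 0.0],
                   [0.0, 0.0, 1.0]])
est = (gt - np.array([1.0, -2.0, 0.5])) @ R_true / 2.5
print(f"ATE after sim(3) alignment: {ate_rmse(est, gt):.2e}")
```

Aligning over scale as well as rotation and translation matters for monocular pipelines, whose estimated scale can drift. For stereo-inertial systems the recovered scale should stay close to 1.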

\[\begin{align} e_{ATE} = \min_{(\boldsymbol{R},\boldsymbol{t},s)\in sim(3)} \sqrt{\frac{1}{n} \sum_{i=1}^{n} \left\| \boldsymbol{p}_i - (s \boldsymbol{R} \hat{\boldsymbol{p}}_i + \boldsymbol{t}) \right\|^2} \end{align}\]

Performance of Monocular Inertial SLAM algorithms

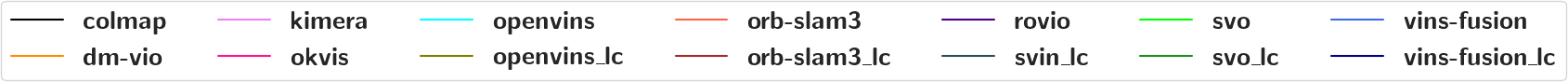

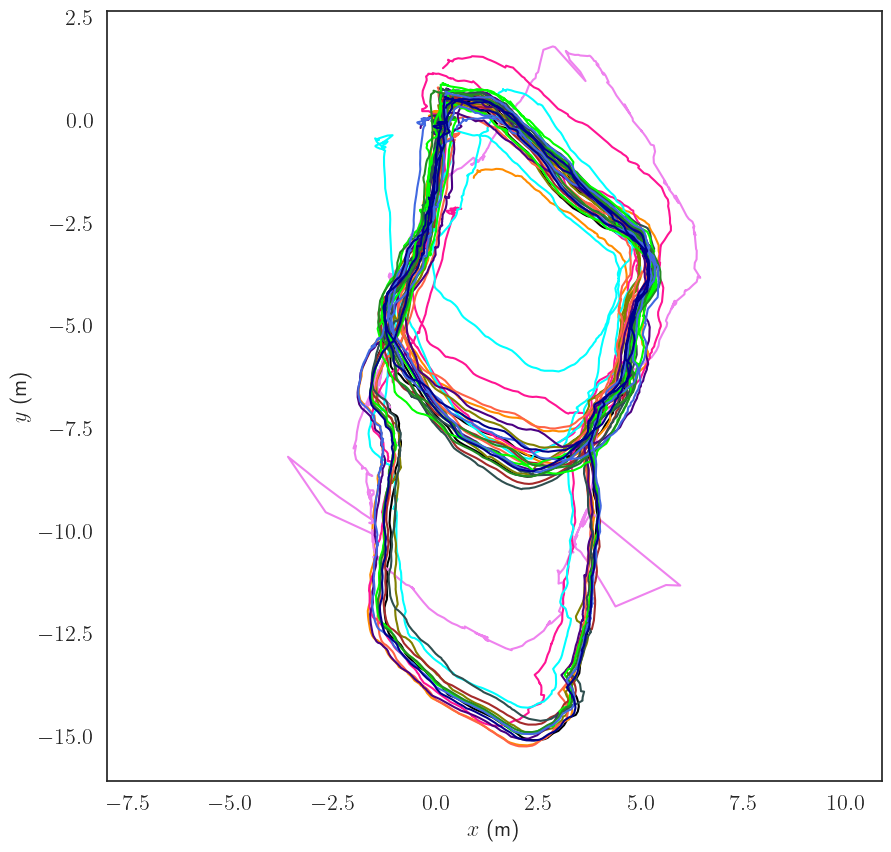

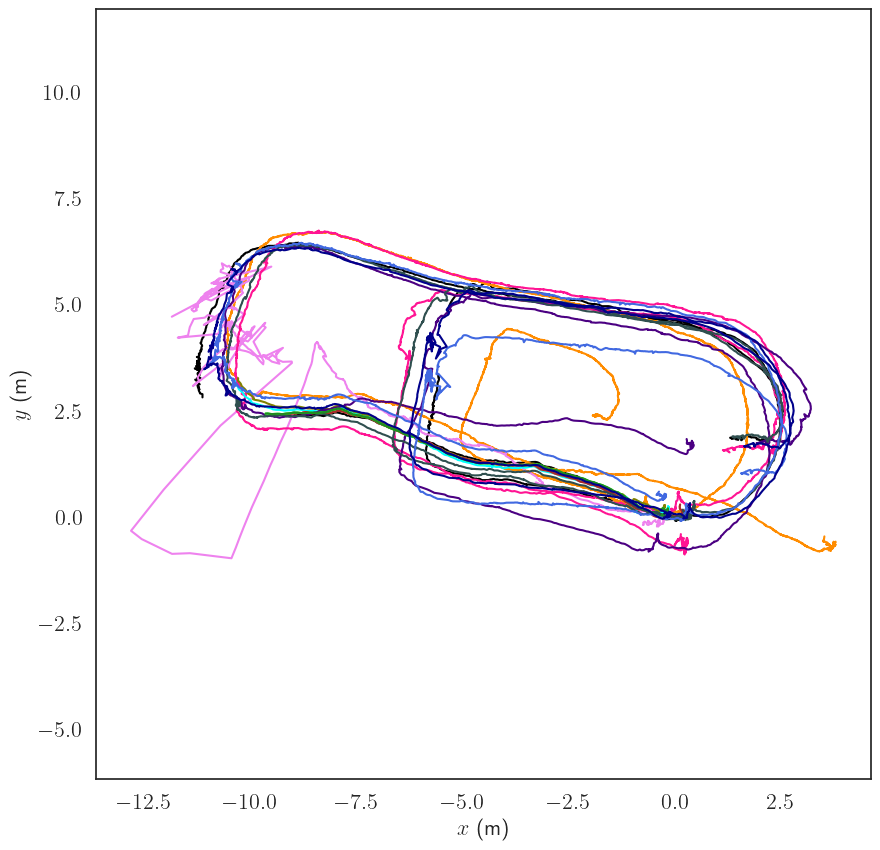

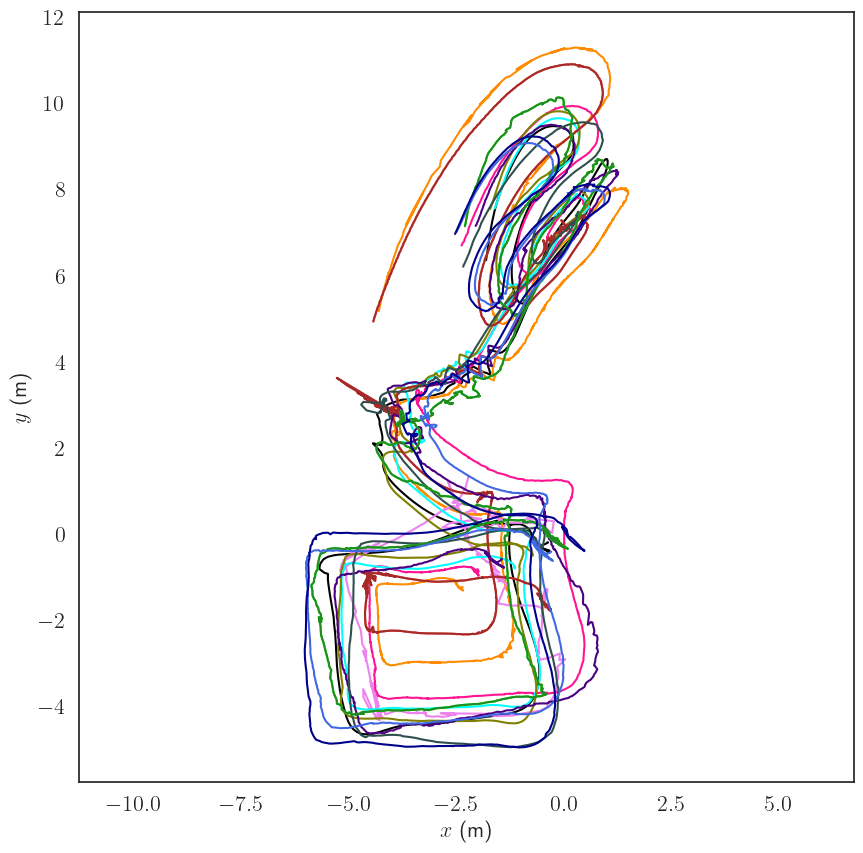

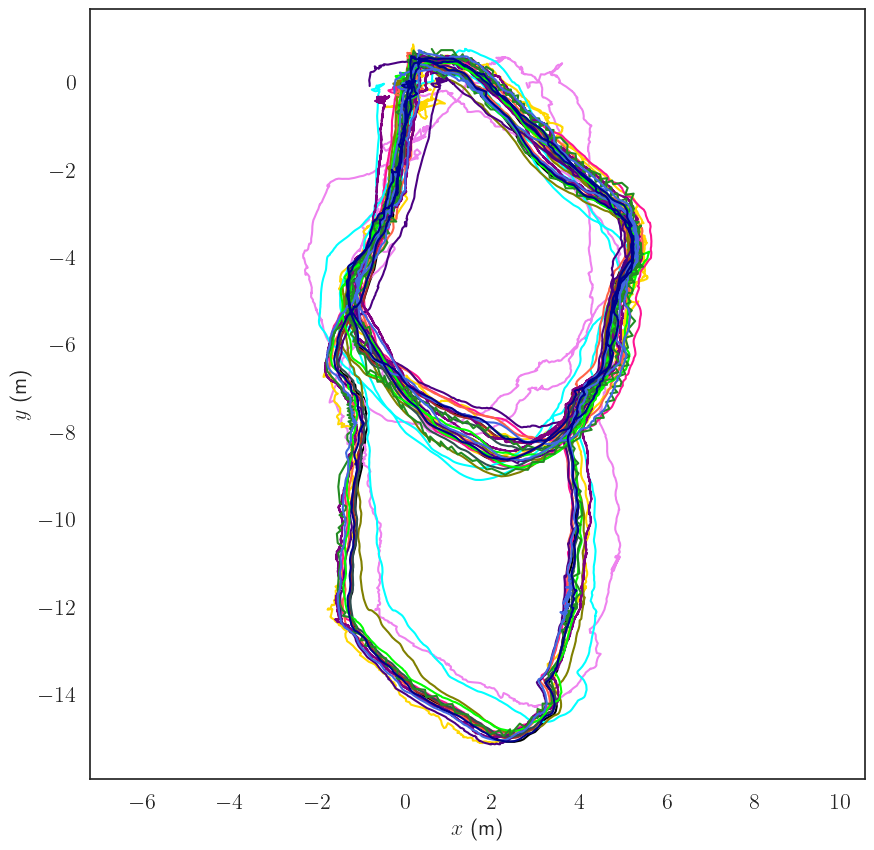

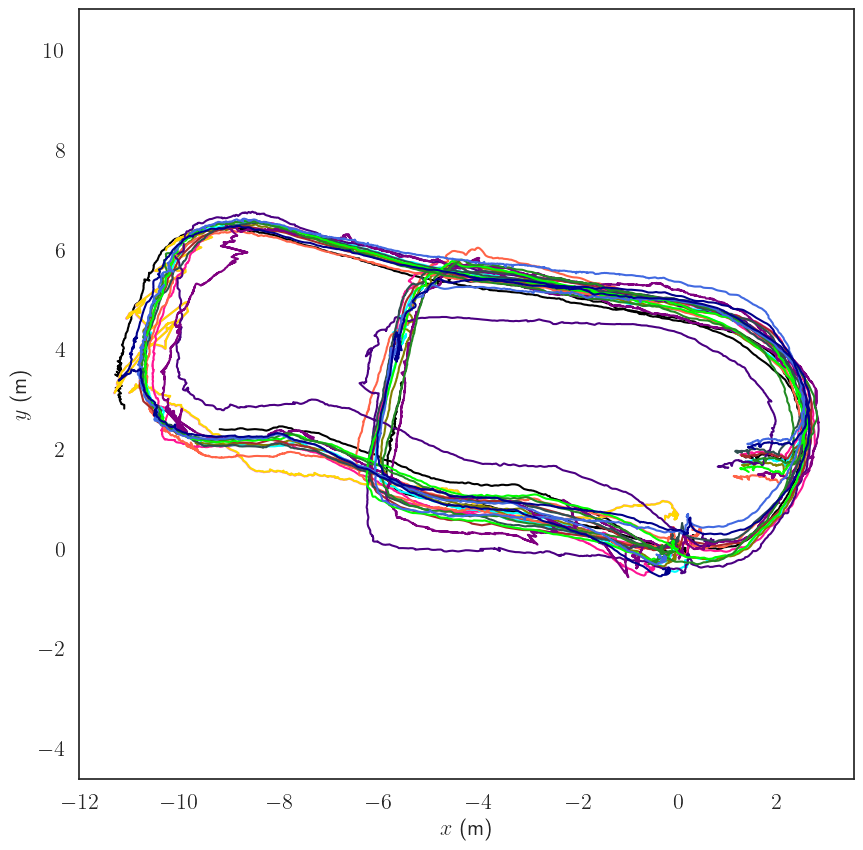

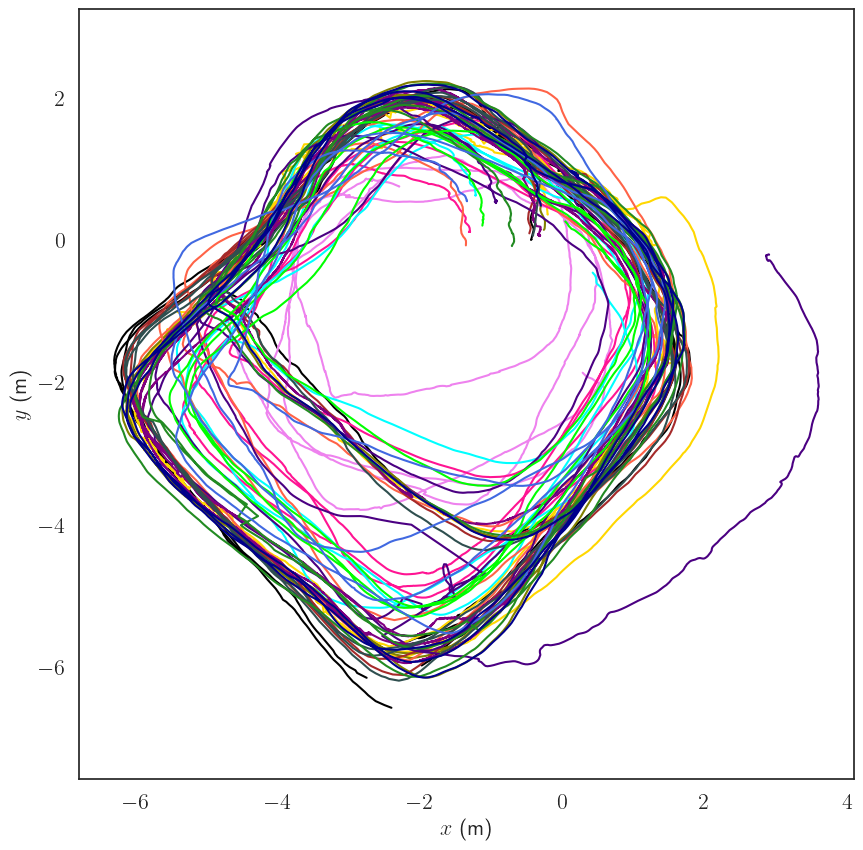

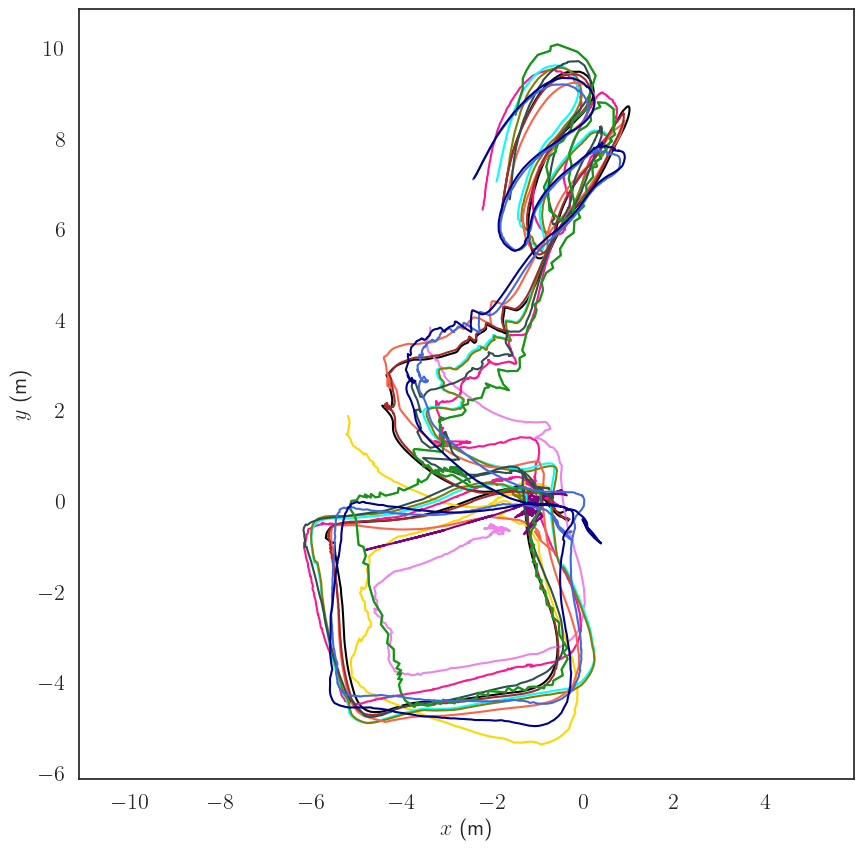

The figures show the trajectories of various monocular visual-inertial SLAM algorithms on the underwater datasets.

| Dataset | dm-vio | kimera | okvis | openvins | openvins_lc | orb-slam3 | orb-slam3_lc | rovio | svin_lc | svo | svo_lc | vins-fusion | vins-fusion_lc |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Cave | 0.51 100% | 2.34 49.5% | 1.08 100% | 1.21 99% | 0.36 98.9% | 0.42 99.9% | 0.14 100% | 0.41 100% | 0.30 100% | 0.31 96.8% | 0.25 96.7% | 0.35 99.9% | 0.17 99.6% |
| Bus Outside | 1.81 99.9% | 0.95 33.0% | 0.67 99.9% | 0.2 21.0% | 0.17 20.9% | 0.07 20.4% | 0.07 20.4% | 0.77 99.9% | 0.42 99.9% | 0.14 20.2% | 0.14 20.2% | 0.51 99.7% | 0.31 99.2% |
| Cemetery | 0.33 9.6% | 3.18 100% | 1.72 99.9% | 1.56 98.7% | 0.33 97.6% | 1.87 87.0% | 0.71 83.8% | 1.53 99.9% | 0.31 97.5% | 1.43 89.5% | 0.7 81.0% | 1.6 97.3% | 0.38 96.8% |
| Coral Reef | 1.19 99.2% | 1.31 49.1% | 1.19 99.7% | 0.79 88.7% | 0.73 88.2% | 1.23 98.1% | 0.23 98.1% | 0.75 99.8% | 0.79 99.8% | 0.82 97.9% | 0.82 97.9% | 0.93 99.2% | 0.84 96.4% |

The table above shows the performance of various monocular visual-inertial SLAM algorithms. For each dataset and algorithm, the first value is the translation RMSE after sim(3) trajectory alignment and the second value is the percentage of time the trajectory was tracked. The best performing algorithm with the lowest RMSE is highlighted in bold for each dataset, and the best loop-closure-based algorithm is highlighted in blue.
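The tracking percentage can be read as the share of the sequence duration for which an algorithm reports poses. The page does not spell out the exact computation, so the sketch below is only one plausible definition: intervals between consecutive pose timestamps count as tracked unless the gap exceeds a threshold. The function name `tracking_percentage` and the `max_gap` parameter (1 second by default) are assumptions introduced for illustration.

```python
def tracking_percentage(est_stamps, seq_start, seq_end, max_gap=1.0):
    """Percent of [seq_start, seq_end] covered by pose estimates.

    Assumption: consecutive poses more than `max_gap` seconds apart
    indicate a tracking loss, and that interval is not counted.
    """
    stamps = sorted(t for t in est_stamps if seq_start <= t <= seq_end)
    tracked = 0.0
    for prev, curr in zip(stamps, stamps[1:]):
        if curr - prev <= max_gap:
            tracked += curr - prev
    duration = seq_end - seq_start
    return 100.0 * tracked / duration if duration > 0 else 0.0


# Hypothetical 10 s sequence with poses every 0.5 s and a dropout
# between t = 4 s and t = 8 s.
stamps = [0.5 * i for i in range(21) if not (4.0 < 0.5 * i < 8.0)]
print(tracking_percentage(stamps, 0.0, 10.0))
```

Reporting this value alongside the RMSE matters because a low error over a short tracked segment (for example, a run that loses tracking early) is not comparable to a similar error over the full trajectory.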

Performance of Stereo Inertial SLAM algorithms

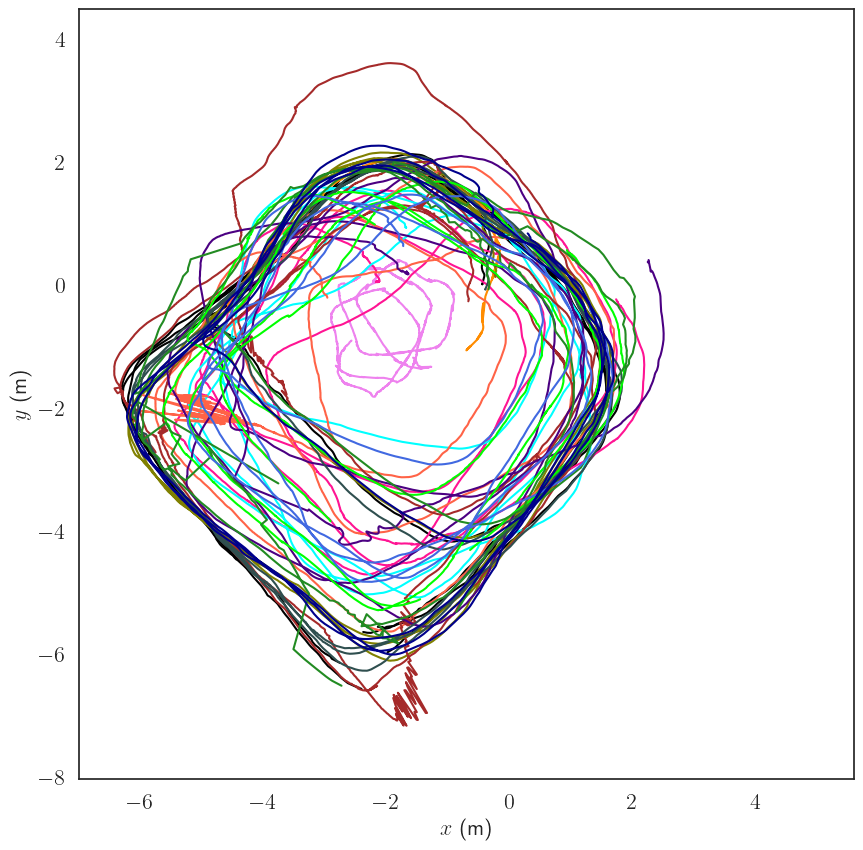

The figure shows the trajectories of various stereo visual-inertial SLAM algorithms on the underwater datasets.

| Dataset | kimera | kimera_lc | okvis | openvins | openvins_lc | orb-slam3 | orb-slam3_lc | rovio | s-msckf | svin_lc | svo | svo_lc | vins-fusion | vins-fusion_lc |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Cave | 1.52 100.0% | 0.44 99.9% | 0.33 99.9% | 0.67 98.0% | 0.33 98.3% | 0.22 100.0% | 0.05 100.0% | 0.36 100.0% | 0.37 99.7% | 0.09 99.9% | 0.33 98.1% | 0.34 95.3% | 0.27 99.8% | 0.11 99.6% |
| Bus Outside | 0.72 31.3% | 0.72 31.5% | 0.44 99.9% | 0.39 98.6% | 0.32 98.5% | 0.42 100.0% | 0.28 100.0% | 0.81 99.9% | 0.57 99.6% | 0.35 99.8% | 0.36 96.4% | 0.34 96.4% | 0.39 99.7% | 0.39 99.2% |
| Cemetery | 2.28 99.2% | 0.45 99.1% | 1.54 99.9% | 1.37 99.3% | 0.36 97.5% | 1.02 87.8% | 0.22 94.8% | 1.01 99.9% | 0.71 99.5% | 0.32 99.9% | 1.36 97.3% | 0.56 97.3% | 1.31 99.6% | 0.36 97.1% |
| Coral Reef | 1.37 49.1% | 0.93 49.0% | 0.95 99.7% | 0.82 94.2% | 0.81 93.8% | 0.56 93.0% | 0.10 93.0% | 0.79 99.8% | 0.78 21.3% | 0.68 99.8% | 1.05 90.2% | 1.05 90.2% | 0.91 99.2% | 0.87 96.7% |

The table above shows the performance of various stereo visual-inertial SLAM algorithms. For each dataset and algorithm, the first value is the translation RMSE after sim(3) trajectory alignment and the second value is the percentage of time the trajectory was tracked. The best performing algorithm with the lowest RMSE is highlighted in bold for each dataset, and the best loop-closure-based algorithm is highlighted in blue.

Takeaways

- Including IMU data substantially improves performance
  - The IMU can propagate the state for a few seconds
- Most algorithms fail at similar segments of the trajectory
  - Challenging scenarios
- Descriptor matching generally performs better than the KLT tracker
  - Illumination changes
- Direct methods sometimes cannot solve the optimization problem
  - Illumination changes, no photometric calibration
- Optimization-based methods perform slightly better than filtering

Citation

If you find this work useful in your research, please consider citing:

@inproceedings{comppaper,
  author = {Bharat Joshi and Brennan Cain and James Johnson and Michail Kalitazkis and Sharmin Rahman and Marios Xanthidis and Alan Hernandez and Nare Karaperyan and Alberto {Quattrini Li} and Nikolaos Vitzilaios and Ioannis Rekleitis},
  title = {Experimental Comparison of Open Source Visual-Inertial-Based State Estimation Algorithms in the Underwater Domain},
  booktitle = {IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},
  year = {2019},
  doi = {10.1109/IROS40897.2019.8968049}
}